What Are Data Center Migration Tools?

Data center migration tools support the process of relocating data, applications, and workloads from one data center environment to another. They provide a structured approach that keeps the migration efficient, secure, and minimally disruptive.

As organizations evolve, they may need to move to new physical locations, adopt cloud solutions, or upgrade their current infrastructure, prompting the need for migration solutions. These tools help IT departments manage these transitions smoothly, reducing the risk of data loss or downtime.

The benefits of using migration tools include simplified processes, automation capabilities, and improved security measures. By leveraging these tools, organizations can mitigate risks associated with migration, such as data breaches or operational disruptions. They also provide valuable insights and analytics to optimize future migrations.

Key Features of Data Center Migration Tools

Discovery and Assessment

Discovery and assessment are the initial steps of a data center migration. They focus on identifying existing assets and evaluating their readiness to move. These stages involve cataloging hardware, software, and network configurations, mapping dependencies, and evaluating workloads.

Assessment tools evaluate this inventory against the target environment's requirements, confirming compatibility and surfacing potential issues before the migration begins. With a rigorous assessment, organizations can prioritize workloads by complexity, sensitivity, and interdependencies, which paves the way for a smooth transition to new platforms or environments.
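To make the assessment step concrete, here is a minimal Python sketch that checks a discovered inventory against target requirements. The `Server` record, the supported-OS list, and the size limits are hypothetical placeholders, not values from any real platform or tool; a real assessment would take them from the target environment's documentation.

```python
from dataclasses import dataclass

# Hypothetical inventory record produced by a discovery step.
@dataclass
class Server:
    name: str
    os: str
    cpu_cores: int
    memory_gb: int

# Hypothetical target-environment requirements (placeholders, not real limits).
TARGET_SUPPORTED_OS = {"rhel8", "rhel9", "ubuntu22.04", "windows2019", "windows2022"}
TARGET_MAX_CORES = 64
TARGET_MAX_MEMORY_GB = 512

def assess(server: Server) -> list[str]:
    """Return compatibility issues for one server; an empty list means ready."""
    issues = []
    if server.os not in TARGET_SUPPORTED_OS:
        issues.append(f"unsupported OS: {server.os}")
    if server.cpu_cores > TARGET_MAX_CORES:
        issues.append(f"needs {server.cpu_cores} cores, target max is {TARGET_MAX_CORES}")
    if server.memory_gb > TARGET_MAX_MEMORY_GB:
        issues.append(f"needs {server.memory_gb} GB RAM, target max is {TARGET_MAX_MEMORY_GB}")
    return issues

inventory = [
    Server("web-01", "ubuntu22.04", 8, 32),
    Server("db-01", "rhel6", 32, 256),
    Server("analytics-01", "rhel9", 96, 768),
]

report = {s.name: assess(s) for s in inventory}
for name, issues in report.items():
    print(name, "ready" if not issues else issues)
```

Servers with an empty issue list can be scheduled early, while flagged ones need remediation (an OS upgrade, resizing, or a different target) before they move.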

Migration Planning and Orchestration

Migration planning involves setting goals, establishing timelines, and assigning roles to team members. Orchestration tools help coordinate complex tasks, provide real-time updates, and automate repetitive processes. The goal is to ensure that all aspects of the migration are synchronized, minimizing the chance of errors and reducing downtime.

A well-orchestrated migration also uses automation to enforce the order of tasks, so each step starts only after the steps it depends on have completed. This reduces the potential for human error and makes it easier to pause, retry, or adjust steps when unforeseen problems arise.
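One common way to coordinate migration tasks is to run them in dependency order. The sketch below uses Python's standard-library `graphlib.TopologicalSorter` (Python 3.9+). The step names and the `run_step` function are hypothetical stand-ins for real automation; this illustrates the scheduling idea, not how any particular orchestration product works.

```python
from graphlib import TopologicalSorter

# Hypothetical migration plan: each step maps to the set of steps it depends on.
plan = {
    "snapshot_db": set(),
    "copy_db": {"snapshot_db"},
    "copy_app": set(),
    "update_dns": {"copy_db", "copy_app"},
    "smoke_test": {"update_dns"},
}

def run_step(name: str) -> bool:
    """Placeholder for real automation (scripts, API calls); returns success."""
    print(f"running {name}")
    return True

def orchestrate(plan, run=run_step):
    """Run steps in dependency order and stop at the first failure."""
    sorter = TopologicalSorter(plan)
    sorter.prepare()
    completed = []
    while sorter.is_active():
        for step in sorter.get_ready():
            if not run(step):
                raise RuntimeError(f"step {step!r} failed; completed so far: {completed}")
            completed.append(step)
            sorter.done(step)
    return completed

order = orchestrate(plan)
```

Because a failed step raises before `done()` is called, nothing that depends on it is ever started, which is the behavior you want before a cutover step such as a DNS change.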

Data and Application Migration

Data and application migration focuses on securely transferring data assets and applications to the new environment. Tools in this category maintain data integrity, security, and consistency during the transfer. The process usually includes data mapping, transformation, and validation to ensure compatibility with the target system, and automation speeds up the transfer while maintaining accuracy and security standards.

Effective data and application migration minimizes risks such as data corruption or application downtime. With pre-defined workflows and automated checks, these tools help maintain business continuity. Comprehensive testing before final cutover ensures that systems operate as expected in the new environment.
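As an illustration of an automated validation check, the following Python sketch compares a source table with its migrated copy using the row count and an order-independent SHA-256 fingerprint. It assumes rows are tuples that serialize identically on both sides; in practice, types such as decimals and timestamps would need to be normalized first, and large tables are usually compared in chunks.

```python
import hashlib

def table_fingerprint(rows):
    """Return (row_count, digest) for a table, independent of row order.

    Each row is serialized with repr() and hashed; the sorted row hashes are
    then hashed together, so the same rows in a different order still match.
    """
    row_hashes = sorted(hashlib.sha256(repr(row).encode("utf-8")).hexdigest() for row in rows)
    combined = hashlib.sha256("".join(row_hashes).encode("ascii")).hexdigest()
    return len(row_hashes), combined

def validate(source_rows, target_rows):
    """True if the target holds exactly the same rows as the source."""
    return table_fingerprint(source_rows) == table_fingerprint(target_rows)

source = [(1, "alice", 120.50), (2, "bob", 75.00)]
migrated_ok = [(2, "bob", 75.00), (1, "alice", 120.50)]   # same rows, different order
migrated_bad = [(1, "alice", 120.50), (2, "bob", 57.00)]  # value corrupted in transit

print(validate(source, migrated_ok))   # True
print(validate(source, migrated_bad))  # False
```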

Learn more in our detailed guide to application migration strategy

Network and Infrastructure Migration

Network and infrastructure migration involves transitioning network components and physical or cloud-based infrastructure. Tools in this area reconfigure network paths, ensure compatibility with new systems, and maintain connectivity. Infrastructure migration may also involve moving servers, storage devices, and other hardware.

Tools used for network and infrastructure migration provide automation and monitoring capabilities. Automation ensures configuration accuracy, while continuous monitoring identifies potential issues early. By focusing on maintaining service levels and connectivity, these tools help organizations transition infrastructure components with minimal impact on users.
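One configuration-accuracy check that is easy to automate is validating an IP re-addressing plan before cutover. This Python sketch uses the standard `ipaddress` module; the plan and the target subnet are hypothetical examples.

```python
import ipaddress

# Hypothetical re-addressing plan: old IP -> new IP in the target data center.
ip_plan = {
    "10.1.0.10": "172.20.5.10",
    "10.1.0.11": "172.20.5.11",
    "10.1.0.12": "172.20.6.12",   # outside the target subnet
    "10.1.0.13": "172.20.5.11",   # duplicates another assignment
}
TARGET_SUBNET = ipaddress.ip_network("172.20.5.0/24")  # assumed target subnet

def check_plan(plan, subnet):
    """Return problems: invalid addresses, out-of-subnet targets, duplicate targets."""
    problems = []
    seen = {}
    for old, new in plan.items():
        try:
            addr = ipaddress.ip_address(new)
        except ValueError:
            problems.append(f"{old}: invalid target address {new!r}")
            continue
        if addr not in subnet:
            problems.append(f"{old}: {new} is outside {subnet}")
        if addr in seen:
            problems.append(f"{old}: {new} already assigned to {seen[addr]}")
        else:
            seen[addr] = old
    return problems

for problem in check_plan(ip_plan, TARGET_SUBNET):
    print(problem)
```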

Security and Compliance

Many migration tools include built-in security measures, such as encryption and access controls, to protect sensitive information. Compliance checks help ensure that the migration meets industry standards and regulatory requirements, avoiding potential legal or operational repercussions.

Security tools provide detailed audit trails and log management capabilities, allowing organizations to monitor activities during migration. Compliance features help ensure that any changes to systems or data structures are documented and meet regulatory obligations.
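Audit trails are most useful when they are tamper-evident. The Python sketch below shows one generic technique, a hash-chained log in which each entry stores the hash of the previous one. It is an illustration under simple assumptions, not how any specific product implements logging; in a real deployment the latest hash would also be stored somewhere the log writer cannot modify, since anyone able to rewrite the entire log could recompute every hash.

```python
import hashlib
import json
from datetime import datetime, timezone

class AuditLog:
    """Append-only log where each entry includes the hash of the previous entry.

    Editing or deleting an earlier entry changes its hash and breaks the chain,
    which verify() detects. This shows tamper evidence, not access control.
    """

    def __init__(self):
        self.entries = []

    def append(self, actor: str, action: str) -> dict:
        prev_hash = self.entries[-1]["hash"] if self.entries else "0" * 64
        entry = {
            "time": datetime.now(timezone.utc).isoformat(),
            "actor": actor,
            "action": action,
            "prev_hash": prev_hash,
        }
        entry["hash"] = self._digest(entry)
        self.entries.append(entry)
        return entry

    @staticmethod
    def _digest(entry: dict) -> str:
        # Hash every field except the stored hash itself, with a stable key order.
        body = {k: v for k, v in entry.items() if k != "hash"}
        return hashlib.sha256(json.dumps(body, sort_keys=True).encode("utf-8")).hexdigest()

    def verify(self) -> bool:
        prev_hash = "0" * 64
        for entry in self.entries:
            if entry["prev_hash"] != prev_hash or entry["hash"] != self._digest(entry):
                return False
            prev_hash = entry["hash"]
        return True

log = AuditLog()
log.append("alice", "started replication of db-01")
log.append("bob", "changed firewall rule fw-17")
print(log.verify())  # True
log.entries[0]["actor"] = "mallory"  # simulate tampering
print(log.verify())  # False
```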

Notable Data Center Migration Tools

1. Faddom

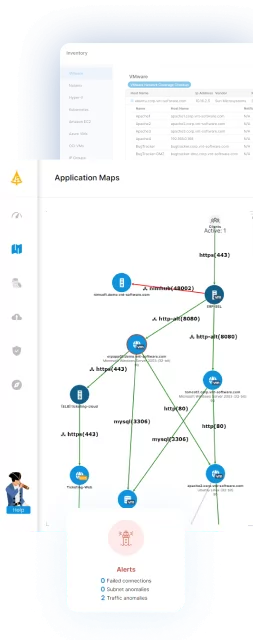

Faddom is an agentless application dependency mapping tool designed to simplify the planning phase of data center migrations. It provides real-time visibility into business applications, server dependencies, and network flows, allowing organizations to build accurate migration strategies with minimal risk. Its visual interface supports wave-based planning and rightsizing decisions for cost-effective, phased transitions across hybrid environments.

Key features include:

- Real-time application dependency mapping: Visualizes all dependencies between servers, applications, and network components without deploying agents.

- Agentless and credential-free deployment: Installs in under 60 minutes without impacting system performance or security.

- Wave-based migration planning: Groups and prioritizes workloads based on actual interdependencies to minimize disruption during phased transitions.

- Rightsizing recommendations: Identifies underutilized resources and redundant infrastructure to optimize future-state environments.

- Hybrid infrastructure visibility: Provides continuous mapping across both on-premises and cloud environments to support complex IT landscapes.

Discover more about Data Center Migration with Faddom

Source: Faddom
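Wave-based planning generally starts from a simple idea: servers that communicate with each other should move together. The following Python sketch groups servers into candidate waves by finding connected components in a list of observed flows, using union-find. The flow data and the smallest-group-first ordering are hypothetical, and this is not Faddom's algorithm or API.

```python
from collections import defaultdict

# Hypothetical observed network flows between servers.
flows = [
    ("web-01", "app-01"),
    ("app-01", "db-01"),
    ("web-02", "app-02"),
    ("batch-01", "db-02"),
]
servers = {"web-01", "app-01", "db-01", "web-02", "app-02", "batch-01", "db-02", "ftp-01"}

def dependency_groups(servers, flows):
    """Group servers into connected components using union-find.

    Servers in the same component talk to each other, so moving them in the
    same wave avoids splitting dependencies across data centers.
    """
    parent = {s: s for s in servers}

    def find(s):
        while parent[s] != s:
            parent[s] = parent[parent[s]]  # path halving keeps trees shallow
            s = parent[s]
        return s

    for a, b in flows:
        parent[find(a)] = find(b)

    groups = defaultdict(set)
    for s in servers:
        groups[find(s)].add(s)
    return list(groups.values())

# One simple policy: migrate the smallest groups first as low-risk early waves.
waves = sorted(dependency_groups(servers, flows), key=lambda g: (len(g), sorted(g)))
for i, wave in enumerate(waves, start=1):
    print(f"wave {i}: {sorted(wave)}")
```

Real plans also weigh business criticality and maintenance windows, so the sort key here is only a starting point.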

2. Fivetran

Fivetran is a data integration tool designed to simplify and accelerate data movement between destinations. It automates data replication across on-premises systems, cloud platforms, and data warehouses. Using log-free replication and compressed snapshots, Fivetran keeps data synchronized securely and continuously.

Key features include:

- Destination-to-destination data movement: Allows organizations to replicate data between multiple destinations.

- Log-free replication: Uses read-only access to extract and synchronize data without adding load to source systems.

- Cloud migration support: Enables the transition from on-premises systems to cloud-based data warehouses like Snowflake, BigQuery, and Redshift.

- Automated database replication: Keeps operational databases in sync with analytics environments.

- Data transformation and enrichment: Lets teams run analytics and transformations within the data warehouse before pushing data back to operational databases.

Source: Fivetran
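Log-free replication detects changes without reading the source database's transaction log. A generic way to do that is to compare successive snapshots keyed by primary key, as in this Python sketch. It illustrates the snapshot-diff idea only and is not Fivetran's implementation; this naive version keeps full snapshots in memory, which would not scale to large tables.

```python
def diff_snapshots(previous: dict, current: dict):
    """Compare two snapshots keyed by primary key and return the changes.

    previous/current map primary key -> row (any comparable value, e.g. a tuple).
    """
    inserts = {k: current[k] for k in current.keys() - previous.keys()}
    deletes = set(previous.keys() - current.keys())
    updates = {
        k: current[k]
        for k in current.keys() & previous.keys()
        if current[k] != previous[k]
    }
    return inserts, updates, deletes

yesterday = {1: ("alice", "gold"), 2: ("bob", "silver"), 3: ("carol", "bronze")}
today = {1: ("alice", "gold"), 2: ("bob", "gold"), 4: ("dave", "silver")}

inserts, updates, deletes = diff_snapshots(yesterday, today)
print(inserts)  # {4: ('dave', 'silver')}
print(updates)  # {2: ('bob', 'gold')}
print(deletes)  # {3}
```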

3. Matillion

Matillion is a cloud-native data integration platform that enables organizations to build and manage data pipelines without coding. It automates data movement, transformation, and orchestration across various cloud data platforms.

Key features include:

- No-code data integration: Simplifies data pipeline creation with a code-free interface.

- Automated data pipelines: Supports batch loading and change data capture (CDC) for data replication.

- AI-powered data engineering: Enables integration of large language models (LLMs), retrieval augmented generation (RAG), and AI-powered data transformations.

- Pre-built connectors: Provides connectivity to popular data sources and allows for custom REST API connectors.

- Pushdown architecture: Processes data within cloud platforms for optimal performance and security.

Source: Matillion
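Combining an initial batch load with change data capture (CDC) is a common replication pattern: load a full copy once, then apply captured inserts, updates, and deletes in commit order. The Python sketch below applies a hypothetical list of change events to a target table held in a dict; it does not use Matillion or any CDC library.

```python
from dataclasses import dataclass
from typing import Optional

@dataclass
class ChangeEvent:
    """One captured change: operation, primary key, and the new row (None for deletes)."""
    op: str            # "insert", "update", or "delete"
    key: int
    row: Optional[dict]

def apply_changes(target: dict, events: list) -> dict:
    """Apply CDC events in commit order to a target table keyed by primary key."""
    for ev in events:
        if ev.op in ("insert", "update"):
            target[ev.key] = ev.row      # upsert, so replaying events is harmless
        elif ev.op == "delete":
            target.pop(ev.key, None)     # ignore deletes of rows already gone
        else:
            raise ValueError(f"unknown operation: {ev.op}")
    return target

# Target after the initial batch load, then changes captured on the source.
target = {1: {"name": "alice"}, 2: {"name": "bob"}}
events = [
    ChangeEvent("insert", 3, {"name": "carol"}),
    ChangeEvent("update", 2, {"name": "robert"}),
    ChangeEvent("delete", 1, None),
]
apply_changes(target, events)
print(target)  # {2: {'name': 'robert'}, 3: {'name': 'carol'}}
```

Treating inserts and updates as upserts makes the apply step idempotent, which matters when a pipeline restarts and replays events it may already have applied.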

4. Stitch

Stitch is a cloud-based ETL (extract, transform, load) tool that automates data movement from multiple sources to a centralized data warehouse. It enables organizations to set up and maintain data pipelines with minimal technical expertise.

Key features include:

- No-code data integration: Connects and transfers data from multiple sources without code.

- Automated data pipelines: Runs pipelines on a recurring basis to keep data in the warehouse fresh.

- Centralized data management: Consolidates business data into a single location for reporting and analytics.

- Enterprise-grade security: SOC 2 Type II certified, with support for HIPAA, ISO/IEC 27001, GDPR, and CCPA compliance.

- High reliability: Designed for high uptime and uninterrupted data flow.

Source: Stitch
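Keeping warehouse data fresh usually relies on incremental extraction: each run reads only rows whose replication key (here, an `updated_at` timestamp) is at or after a saved bookmark. The Python sketch below uses hypothetical in-memory rows and is a generic illustration, not Stitch's API.

```python
from datetime import datetime

# Hypothetical source table; "updated_at" serves as the replication key.
source_rows = [
    {"id": 1, "status": "new",     "updated_at": datetime(2024, 1, 1, 9, 0)},
    {"id": 2, "status": "shipped", "updated_at": datetime(2024, 1, 1, 12, 0)},
    {"id": 3, "status": "new",     "updated_at": datetime(2024, 1, 2, 8, 30)},
]

def extract_incremental(rows, bookmark):
    """Return rows changed at or after the bookmark, plus the new bookmark.

    Using >= rather than > re-reads rows that share the bookmark value, so a row
    updated in the same instant is not skipped; the load step should upsert.
    """
    changed = [r for r in rows if bookmark is None or r["updated_at"] >= bookmark]
    changed.sort(key=lambda r: r["updated_at"])
    new_bookmark = changed[-1]["updated_at"] if changed else bookmark
    return changed, new_bookmark

# First run: no bookmark yet, so everything is extracted (a full historical sync).
batch, bookmark = extract_incremental(source_rows, None)
print(len(batch), bookmark)

# A row changes on the source; the next run picks up only new or updated rows.
source_rows[0] = {"id": 1, "status": "cancelled", "updated_at": datetime(2024, 1, 3, 10, 0)}
batch, bookmark = extract_incremental(source_rows, bookmark)
print([r["id"] for r in batch])
```

Note that this approach cannot see hard deletes on the source, since a deleted row no longer has an `updated_at` value to compare.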

5. Hevo Data

Hevo Data is a no-code ELT (extract, load, transform) platform that automates real-time data pipeline management. It integrates data from a wide range of sources, including through free connectors, and makes it analysis-ready without requiring any code.

Key features include:

- No-code data integration: Enables data transfer from sources without requiring code.

- Real-time data processing: Supports continuous data migration, ensuring up-to-date insights for analytics.

- Automatic schema mapping: Detects schema changes in the source and applies them to the target to maintain data consistency.

- Monitoring & alerts: Identifies and notifies users of issues during migration.

- Security & compliance: Provides data encryption in transit and at rest, access control, and compliance checks for regulatory standards.

Source: Hevo Data
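Automatic schema mapping typically involves inferring column types from incoming records and comparing them with the target schema. This Python sketch uses a hypothetical `TYPE_MAP` and table name (`users`); new columns become `ALTER TABLE` statements, while type conflicts are reported for review instead of being applied. It is not Hevo Data's implementation.

```python
# Hypothetical mapping from Python value types to target warehouse column types.
TYPE_MAP = {"int": "BIGINT", "float": "DOUBLE", "str": "VARCHAR", "bool": "BOOLEAN"}

def schema_changes(target_schema: dict, record: dict):
    """Compare an incoming record with the target schema.

    Returns (new_columns, conflicts): columns to add, and columns whose inferred
    type differs from the target and need a human decision.
    """
    new_columns, conflicts = {}, {}
    for col, val in record.items():
        if val is None:
            continue  # a null value carries no type information
        col_type = TYPE_MAP[type(val).__name__]
        if col not in target_schema:
            new_columns[col] = col_type
        elif target_schema[col] != col_type:
            conflicts[col] = (target_schema[col], col_type)
    return new_columns, conflicts

target_schema = {"id": "BIGINT", "email": "VARCHAR", "score": "DOUBLE"}
record = {"id": 42, "email": "a@example.com", "score": "n/a", "is_active": True, "phone": None}

new_columns, conflicts = schema_changes(target_schema, record)
for col, col_type in new_columns.items():
    print(f"ALTER TABLE users ADD COLUMN {col} {col_type};")
print(conflicts)
```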

6. IBM InfoSphere

IBM InfoSphere is a data integration platform to help organizations manage, cleanse, monitor, and transform their data. It supports massively parallel processing (MPP) for scalability and flexibility, allowing organizations to integrate and govern data across multiple environments, including on-premises, cloud, and hybrid deployments.

Key features include:

- Data integration: Supports flexible ETL processes across multiple systems.

- Scalability with massively parallel processing (MPP): Enables efficient handling of large-scale data transformations and integrations.

- Data governance and standardization: Provides tools to discover IT assets, define common business language, and enforce data governance policies.

- Automated data quality management: Continuously cleanses and monitors data to improve its reliability.

- Multi-deployment support: Works across on-premises, private cloud, public cloud, and hybrid environments.

Source: IBM
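Massively parallel processing spreads work across nodes by partitioning data, and hash partitioning is one common scheme: every row with the same key lands on the same node, so per-key work can run in parallel without coordination. The Python sketch below simulates this on a single machine; the node count and data are hypothetical, and it does not reflect InfoSphere's internal engine.

```python
import hashlib
from collections import defaultdict

def partition_for(key: str, num_nodes: int) -> int:
    """Map a key to a node with a stable hash (Python's hash() is salted per process)."""
    digest = hashlib.sha256(key.encode("utf-8")).digest()
    return int.from_bytes(digest[:8], "big") % num_nodes

def partition(rows, key_field, num_nodes):
    """Split rows across nodes so that all rows sharing a key land on the same node."""
    parts = defaultdict(list)
    for row in rows:
        parts[partition_for(str(row[key_field]), num_nodes)].append(row)
    return parts

def total_by_customer(rows):
    """Per-node work: aggregate amounts for the customers this node owns."""
    totals = defaultdict(float)
    for row in rows:
        totals[row["customer"]] += row["amount"]
    return dict(totals)

orders = [
    {"customer": "c1", "amount": 10.0},
    {"customer": "c2", "amount": 5.0},
    {"customer": "c1", "amount": 2.5},
    {"customer": "c3", "amount": 7.0},
]

parts = partition(orders, "customer", num_nodes=3)
# Each node could run this step in parallel; results never overlap because a key never spans nodes.
results = {}
for node_rows in parts.values():
    results.update(total_by_customer(node_rows))
print(results)
```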

7. Integrate.io

Integrate.io is a low-code ETL and reverse ETL platform for automating data workflows and integration. With a drag-and-drop interface and built-in data transformations, it lets both technical and non-technical users create and manage data pipelines.

Key features include:

- Low-code ETL & reverse ETL: Allows users to build and manage data pipelines with minimal coding.

- Data transformations: Offers a range of pre-built transformations for easy data manipulation.

- Prebuilt connectors & REST API support: Enables data ingestion from various sources with customizable API connectivity.

- Automated scheduling: Supports recurring job execution through code-free scheduling or cron expressions.

- Logic & dependencies: Allows users to define execution order and dependencies between pipelines and SQL queries.

Source: Integrate.io

8. Airbyte

Airbyte is an open-source ELT platform that provides extensible, customizable data pipelines. It allows users to self-host, manage security, and tailor configurations to their needs. Its integration with existing tools and developer features make it a flexible choice for building data workflows.

Key features include:

- Open-source & extensible: Users can customize and extend the platform to fit data integration needs.

- Prebuilt connectors: Provides a marketplace of connectors or lets users build new ones with the no-code connector builder.

- Self-hosted deployment: Allows control over infrastructure setup, security, and compliance.

- Tool integration: Works with Airflow, Dagster, Prefect, and other data orchestration tools.

- Automation via API & Terraform: Helps manage connections programmatically using API endpoints or configure setups with Terraform.

Source: Airbyte

Conclusion

A well-planned data center migration requires the right tools to ensure efficiency, security, and minimal disruption. These tools help automate critical processes, maintain data integrity, and support compliance with industry standards. By leveraging migration solutions, organizations can reduce risks, simplify transitions, and optimize performance in their new environment.